Britain's Battle with Deepfakes: Setting Standards in AI Detection

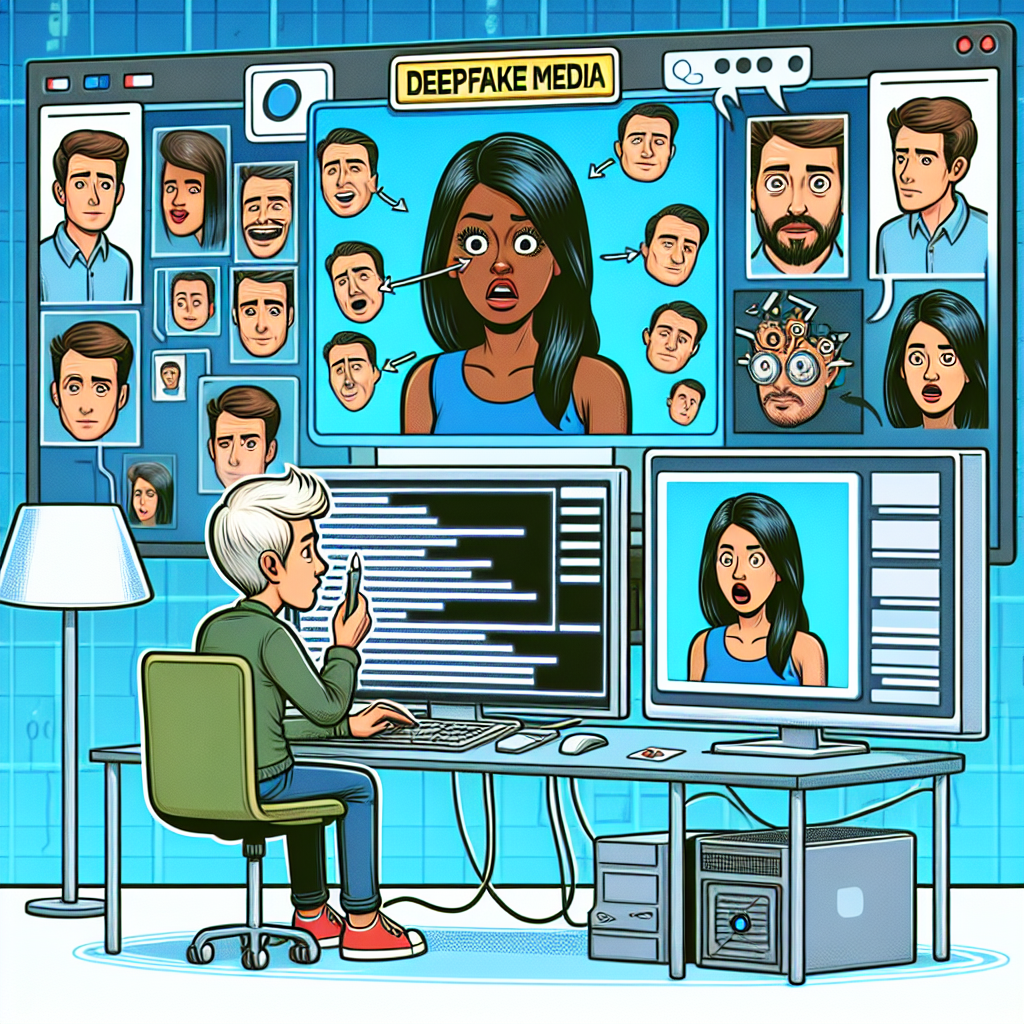

Britain collaborates with Microsoft and experts to create a framework for identifying deepfake content online, aiming to combat deceptive AI material. The government plans to establish detection standards to address the rise of harmful deepfakes, underscoring its commitment to safeguarding the public from fraud and exploitation.

- Country: United Kingdom

Britain is joining forces with Microsoft, academics, and experts to develop a system aimed at detecting deepfake content online, according to a government announcement on Thursday. This initiative seeks to establish standards for managing the dangers posed by harmful AI-generated material.

The UK recently criminalized the creation of non-consensual intimate images, and it is now developing a deepfake detection evaluation framework to set consistent benchmarks for assessing detection technologies. Technology Minister Liz Kendall said criminals are using deepfakes to defraud the public and exploit individuals.

The proposed framework will examine how technology can be used to recognize and tackle harmful deepfake content. The initiative is all the more urgent given the pace of growth: reports indicate an estimated eight million deepfakes are expected in 2025, up from just half a million in 2023, a roughly sixteen-fold increase in two years.
