AI’s hidden weakness: Leading models struggle to collaborate effectively

A team of researchers from EPFL and Microsoft Research has discovered a striking weakness in today's most advanced artificial intelligence systems. The study, titled "The Collaboration Gap," reveals that even the most capable large language models (LLMs) struggle to work together effectively, a flaw that could stall the safe and scalable deployment of multi-agent AI systems.

The research evaluated 32 leading open- and closed-source AI models in both solo and collaborative tasks, introducing a new benchmark to measure how well agents communicate, align, and cooperate when solving problems under partial information. Despite remarkable individual reasoning ability, many models showed severe performance drops when required to collaborate with a copy of themselves.

When strong AI systems fail at teamwork

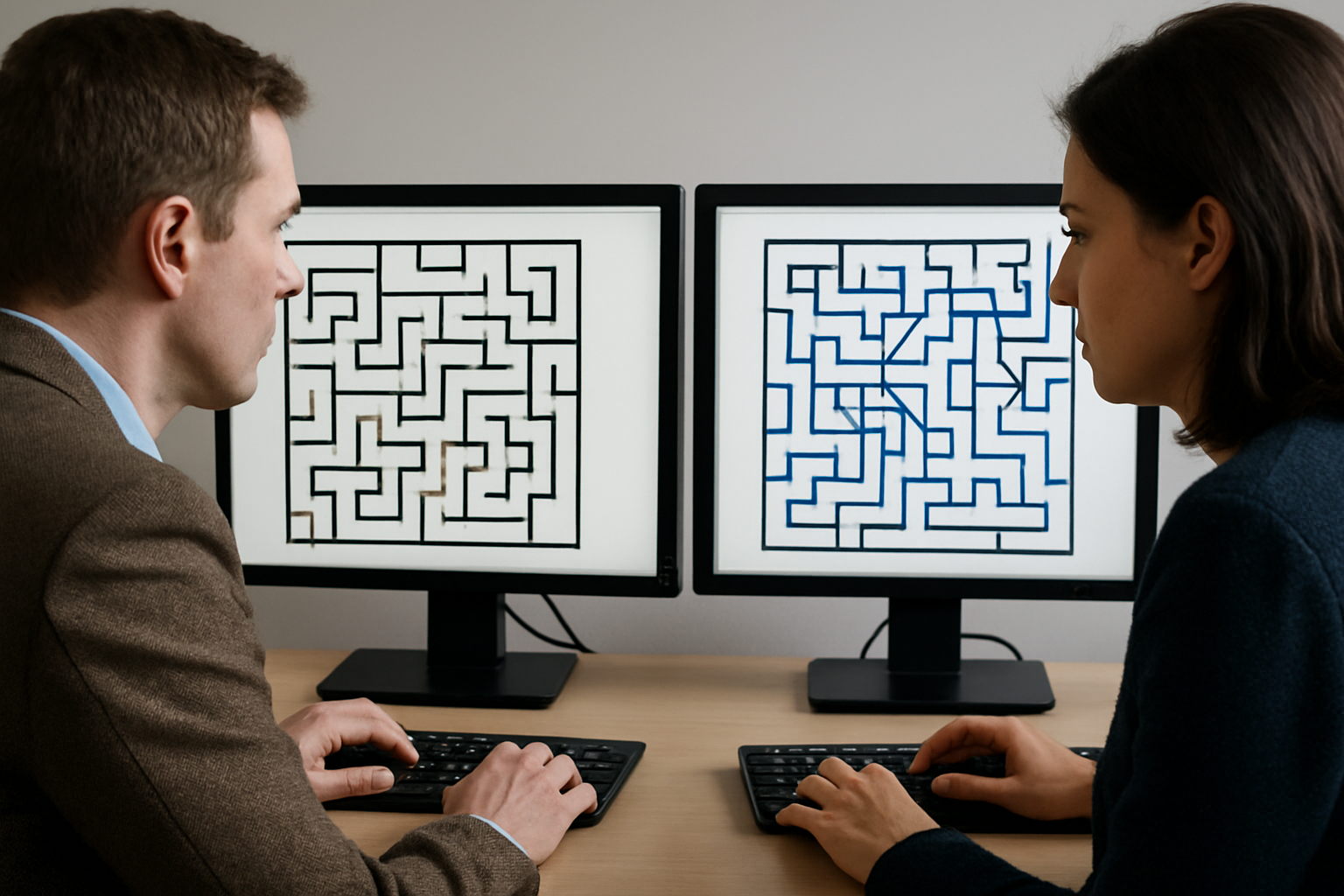

The authors designed a maze-solving benchmark to test how pairs of models interact when each holds only half of the information needed to find a solution. The experiment simulated real-world teamwork: each AI agent saw a different part of the maze and had to discuss moves turn by turn until they agreed on a path. A third model acted as an automated grader to assess whether the pair reached the goal successfully.
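The sketch below shows why splitting the maze forces the agents to communicate. It rests on assumptions that the article does not confirm: a 4x4 grid, a left/right column split, start and goal coordinates known to both agents, and the hypothetical helpers `partial_view`, `merge_views`, and `solve`. Each agent runs a breadth-first search that only steps onto cells it knows are open. Neither half is enough on its own, but merging the two views recovers a path.

```python
from collections import deque

# Hypothetical 4x4 maze: 'S' start, 'G' goal, '#' wall, '.' open cell.
MAZE = [
    "S..#",
    "##.#",
    "#...",
    "###G",
]
START, GOAL = (0, 0), (3, 3)  # assumed known to both agents


def partial_view(maze, visible_cols):
    """Return the maze with every cell outside visible_cols masked as '?'."""
    return [
        "".join(ch if c in visible_cols else "?" for c, ch in enumerate(row))
        for row in maze
    ]


def merge_views(a, b):
    """Combine two partial views cell by cell, keeping whichever is not '?'."""
    return [
        "".join(x if x != "?" else y for x, y in zip(row_a, row_b))
        for row_a, row_b in zip(a, b)
    ]


def solve(view, start=START, goal=GOAL):
    """Breadth-first search that only steps onto cells known to be open."""
    rows, cols = len(view), len(view[0])
    prev = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through predecessors to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in prev
                    and view[nr][nc] in "SG."):
                prev[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # no path can be confirmed from this view alone


left = partial_view(MAZE, {0, 1})   # agent A sees columns 0-1
right = partial_view(MAZE, {2, 3})  # agent B sees columns 2-3
print(solve(left), solve(right))    # None None
print(solve(merge_views(left, right)))
# [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 3), (3, 3)]
```

In the benchmark, the merge step is not a function call. The two models have to reconstruct the shared picture through turn-by-turn dialogue, and the benchmark measures how well they manage it.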

The results revealed a startling phenomenon: the "collaboration gap." Models that solved the maze easily on their own often failed when paired with an identical copy. In many cases, smaller or distilled versions of large models almost completely collapsed under collaborative conditions. This finding challenges assumptions that communication and coordination naturally emerge from intelligence.

According to the study, performance depended heavily on interaction order, that is, on which model initiated the conversation. When a stronger agent started the exchange, results improved sharply. Conversely, letting a weaker model lead often caused confusion and misalignment.

Relay inference: A new way to bridge the gap

To address the breakdown, the researchers proposed a strategy called "relay inference." The method allows a stronger model to "seed" the initial reasoning steps before handing over control to a weaker partner. This minimal priming produced substantial performance gains in both homogeneous and mixed pairings.
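The following is a rough sketch of the relay idea. The article does not give the exact procedure, so this version makes some simplifying assumptions. It treats the dialogue as one shared transcript. The stronger model writes a fixed number of opening messages, and the weaker model then takes every remaining turn. The `Agent` type, `relay_dialogue`, and the toy agents are hypothetical stand-ins for real model calls.

```python
from typing import Callable, List

# Hypothetical stand-in for a model: reads the transcript so far and
# returns its next message. A real setup would call an LLM here.
Agent = Callable[[List[str]], str]


def relay_dialogue(strong: Agent, weak: Agent,
                   seed_turns: int, total_turns: int) -> List[str]:
    """Strong agent writes the first `seed_turns` messages, then the weak
    agent takes over. The weak agent sees the whole transcript, so any
    conventions the strong agent set up remain in its context."""
    transcript: List[str] = []
    for turn in range(total_turns):
        speaker = strong if turn < seed_turns else weak
        transcript.append(speaker(transcript))
    return transcript


# Toy agents that just label who spoke at which position.
def toy_strong(history: List[str]) -> str:
    return f"strong: step {len(history)}"


def toy_weak(history: List[str]) -> str:
    return f"weak: step {len(history)}"


print(relay_dialogue(toy_strong, toy_weak, seed_turns=2, total_turns=4))
# ['strong: step 0', 'strong: step 1', 'weak: step 2', 'weak: step 3']
```

Setting `seed_turns=0` produces a dialogue run entirely by the weaker model. That gives a natural baseline for measuring how much a seeded opening helps.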

The team found that even a single early intervention from a capable model could boost collaboration outcomes. However, the benefit declined when weaker models dominated the early stages of communication, suggesting that first impressions in AI dialogue, much like in human interaction, set the tone for success or failure.

In experiments with heterogeneous pairs drawn from model families such as OpenAI's GPT, Google's Gemini, Anthropic's Claude, and xAI's Grok, researchers observed consistent ordering effects and cross-family dynamics. Performance rarely reached the level of the strongest solo performer. Surprisingly, even within the same model family, collaborative performance depended more on who started the dialogue than on model size or compute scale.

Collaboration as a distinct capability

The research delivers a deeper warning: collaboration is not an automatic by-product of intelligence. It is a distinct capability that current AI training methods do not adequately capture. The authors argue that improving collaboration should become a central design objective, on par with reasoning, creativity, and accuracy.

They highlight that many AI ecosystems, ranging from corporate assistants to autonomous agents, will soon rely on distributed systems where different models must share knowledge, negotiate uncertainty, and coordinate under partial visibility. Without reliable collaboration, such systems risk inefficiency, miscommunication, or failure.

The study further notes that distillation processes, often used to create smaller, faster models, appear to degrade collaborative competence disproportionately. While compression preserves factual knowledge, it may remove the subtle reasoning traits required for mutual understanding and grounding in dialogue.

Implications for the AI industry

The findings arrive at a pivotal moment, as companies race to build "agentic" AI architectures: systems made up of multiple specialized models interacting autonomously. Current frameworks such as Anthropic's MCP, Google's A2A, and Besen's ACP rely on predetermined communication protocols. The authors caution that such rigid designs will not scale to open-world environments where flexible, real-time collaboration is needed.

The team's benchmark provides a measurable foundation for future research into AI–AI and AI–human cooperation. By quantifying the degree of collaborative degradation, it opens a new field of study that could reshape how next-generation AI systems are trained, evaluated, and deployed.

Moreover, the paper draws parallels to human teamwork, suggesting that collaboration failures in machines mirror social coordination challenges in people. Just as humans rely on shared conventions, grounding, and communication strategies, AI systems must develop similar mechanisms to function cohesively.

The researchers argue that overcoming the collaboration gap requires rethinking training paradigms, moving from individual optimization toward collective intelligence. This includes designing models explicitly for dialogue, role-taking, and shared representation, rather than expecting these abilities to emerge spontaneously.

First published in: Devdiscourse