Quantum deep learning decades away from practical breakthrough

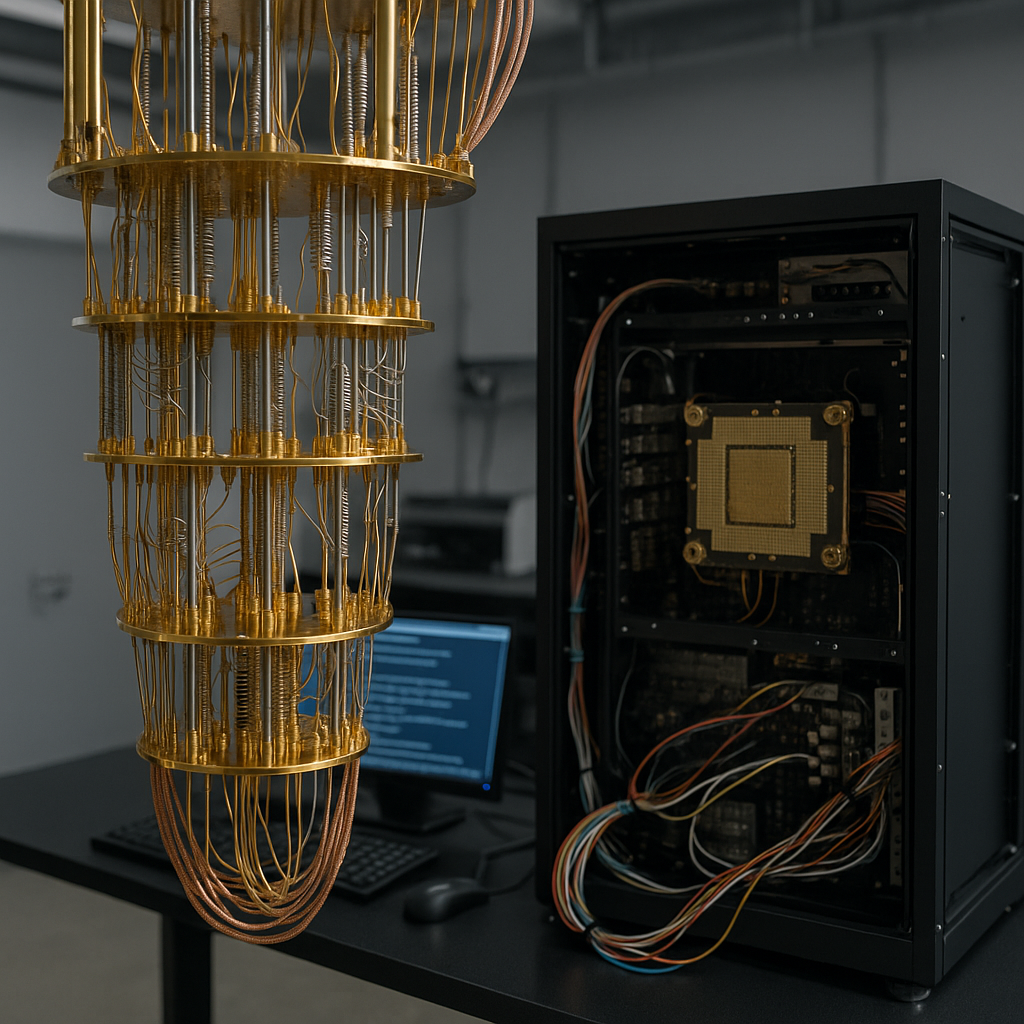

A new study on quantum computing applications in artificial intelligence reveals that current hardware and algorithms remain far from offering any practical advantage over classical machine learning systems.

The research, titled "Quantum Deep Learning Still Needs a Quantum Leap" and published on arXiv, provides the most comprehensive assessment to date of when and how quantum processors could meaningfully outperform GPUs in deep learning tasks.

No imminent breakthrough for quantum machine learning

The study evaluates a broad range of proposed quantum algorithms applied to modern deep learning processes, spanning from neural network training and matrix operations to reinforcement learning and optimization. The findings are unequivocal: while some theoretical algorithms promise exponential or quadratic speedups, the overhead of current quantum hardware negates those benefits for the foreseeable future.

The authors point out that quantum computers are still plagued by high gate error rates, low qubit counts, and extremely slow operation times. Even when assuming optimistic scaling projections, quantum systems are roughly 10 trillion times slower than classical processors on realistic problem sizes. Under these constraints, many of the proposed algorithms would require problem scales beyond any practical application before surpassing traditional computing methods.

The team introduces a quantitative forecasting model, Quantum Economic Advantage (QEA), to determine when quantum machine learning could become economically competitive. According to this model, a quadratic-speedup algorithm like Grover's search would only outperform a classical system once problem sizes exceed 10²⁶ elements, a computational scale that remains unattainable even with rapid quantum hardware development.
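
A back-of-the-envelope sketch shows how a break-even size of this magnitude can arise. It is not the paper's QEA model, which is not reproduced here. It simply assumes that a classical search over N elements takes N steps, that a Grover-type search takes about √N steps, and that each quantum step is slower than a classical step by a fixed factor. The function names and the single `slowdown` parameter are illustrative assumptions; the 10 trillion figure is the one quoted above.

```python
import math


def grover_break_even(slowdown: float) -> float:
    """Problem size N at which the simplified quantum and classical runtimes match.

    Assumed model (not the paper's QEA model):
      classical runtime ~ N steps
      quantum runtime   ~ slowdown * sqrt(N) steps
    Setting N = slowdown * sqrt(N) gives N = slowdown ** 2.
    """
    if slowdown <= 0:
        raise ValueError("slowdown must be positive")
    return slowdown * slowdown


def relative_runtime(n: float, slowdown: float) -> float:
    """Quantum runtime divided by classical runtime; below 1 means quantum wins."""
    return slowdown * math.sqrt(n) / n


SLOWDOWN = 1e13  # "10 trillion times slower", as quoted in the article

print(f"break-even problem size: {grover_break_even(SLOWDOWN):.0e}")
for n in (1e12, 1e20, 1e26, 1e30):
    ratio = relative_runtime(n, SLOWDOWN)
    print(f"N = {n:.0e}: quantum/classical runtime = {ratio:.0e}")
```

Under these assumptions the break-even point is the square of the per-step slowdown, so a 10¹³-fold penalty pushes it to about 10²⁶ elements, the same order of magnitude as the figure reported in the study.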

The illusion of near-term quantum advantage

Despite extensive research activity in the field, the study argues that much of the optimism surrounding quantum deep learning is based on asymptotic theory rather than practical performance metrics. Many algorithms rely on unrealistic assumptions such as fault-tolerant quantum random access memory (QRAM) or noise-free qubit interconnects. The authors note that these components are as technologically challenging as the quantum computers themselves and are unlikely to materialize in the near future.

The analysis covers key components of deep learning pipelines, including matrix multiplication, attention mechanisms, optimization routines, and neural network training loops, to evaluate where quantum speedups could theoretically emerge. Even in the most favorable cases, the authors find no evidence of meaningful acceleration without fundamental breakthroughs in both quantum hardware and software design.

For instance, quantum algorithms for matrix multiplication and matrix-vector operations, which lie at the heart of deep learning, offer theoretical speedups but incur enormous costs for transferring data between classical and quantum memory. Likewise, variational quantum neural networks (QNNs), often cited as a promising NISQ-era approach, remain difficult to train because of "barren plateau" problems, in which gradients vanish during training.

The research also scrutinizes claims around quantum-enhanced reinforcement learning and Grover-type search accelerations. While these techniques yield mathematical improvements under ideal conditions, their implementation on realistic quantum devices results in slower execution times and higher energy costs than optimized GPU-based solutions.

Hardware, algorithms, and the road to real advantage

The authors outline a roadmap of conditions under which quantum deep learning could eventually deliver results. These include significant progress in fault-tolerant error correction, hardware speed, qubit coherence, and the development of genuinely quantum-native learning architectures that do not simply replicate classical neural networks in quantum form.

The study identifies three main bottlenecks preventing progress:

- Limited asymptotic advantage: Quantum algorithms designed for linear algebra, search, or sampling tasks generally provide only polynomial improvements, which are insufficient to overcome massive constant overheads.

- Infeasible memory models: Many of the most promising quantum algorithms depend on QRAM, a component that current technologies cannot realize efficiently.

- Narrow problem applicability: Algorithms that theoretically outperform classical methods often apply to highly constrained or artificial problem classes, with little relevance to today's large-scale AI systems.

Nevertheless, the researchers state that the field still holds potential in the long term, especially if hardware development accelerates or if new architectures, such as photonic quantum processors, achieve significant speed and stability gains. They note that quantum approaches might eventually excel in specialized domains like quantum chemistry, secure data sharing, or stochastic sampling, rather than general-purpose deep learning.

A realistic horizon for quantum AI

While many companies and research institutions have invested heavily in quantum machine learning initiatives, the authors argue that expectations should shift from immediate breakthroughs to long-term foundational research. Rather than racing to build quantum analogues of existing neural architectures, the paper recommends focusing on hybrid quantum–classical workflows, error-resilient algorithms, and quantum-inspired classical methods that can deliver incremental progress in the near term.

Importantly, the authors highlight that classical computing continues to advance at a faster pace than quantum hardware, especially with the emergence of specialized accelerators, low-precision training techniques, and distributed GPU clusters. As a result, the benchmark for achieving "quantum advantage" in AI keeps moving further away.
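
The moving-target effect can be illustrated with a toy projection. The sketch below assumes a quadratic speedup, so that the break-even problem size is the square of the per-step slowdown, and lets both kinds of hardware improve by a fixed factor each year. The yearly gain factors are hypothetical values chosen only for illustration and do not come from the study; only the starting slowdown of 10 trillion is taken from the article.

```python
def break_even_size(years: float, slowdown_now: float,
                    classical_gain: float, quantum_gain: float) -> float:
    """Break-even problem size for a quadratic speedup after `years` years.

    Assumed toy model: each year classical hardware gets `classical_gain`
    times faster and quantum hardware `quantum_gain` times faster, so the
    per-step slowdown is multiplied by classical_gain / quantum_gain yearly.
    """
    slowdown = slowdown_now * (classical_gain / quantum_gain) ** years
    return slowdown * slowdown


SLOWDOWN_NOW = 1e13  # "10 trillion times slower", as quoted in the article

# Hypothetical yearly improvement factors, for illustration only.
scenarios = {
    "classical improves faster": {"classical_gain": 1.4, "quantum_gain": 1.2},
    "quantum improves faster": {"classical_gain": 1.4, "quantum_gain": 2.0},
}

for label, gains in scenarios.items():
    for years in (0, 5, 10):
        size = break_even_size(years, SLOWDOWN_NOW, **gains)
        print(f"{label}, year {years}: break-even size = {size:.1e}")
```

In this toy model the break-even size grows whenever classical hardware improves faster than quantum hardware, and shrinks only when the quantum improvement rate exceeds the classical one.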

The researchers call for the establishment of task-level benchmarking standards and open databases that evaluate algorithms under realistic assumptions, instead of idealized theoretical frameworks. They also urge the community to adopt transparent reporting on qubit error rates, gate times, and cost efficiency to ground discussions in measurable realities.

First published in: Devdiscourse